After our recent PR monitoring blog post got some extra attention, I thought I ought to make a post about the tool itself, how it works, its limitations and what may be missing at the moment.

This post will be updated as our monitoring evolves, and will serve as a reference to answer questions on the data collection and the meaning of some of the things we share.

TL;DR – The tool is not perfect. Some games are much harder to track than others. We constantly try to improve on all fronts and are very aware of the current weaknesses of the system.

The Media Monitor

That is what the tool is called internally. It evolved from the tool set we use internally to support the work our PR team carries out for our clients. Although the first version is about a year old, many of the features have only been developed recently, as the tool is not a core part of our day-to-day work. Having it running for that long has nonetheless proved very useful in identifying seasonal patterns (or the lack of them), but we are still limited in the games we are properly tracking.

So far we have about 6,000 websites in our database (across 28 languages) – the Media Monitor is currently tracking about 3,500 of those websites.

Websites go untracked for different reasons. Some would be too complicated to track technically (or rather, we currently use the method that lets us track the most sites at the same time). Some we don’t track because of a few bugs we still need to iron out. And finally, some we decided not to track at all because of the nature of their content.

Every single website in the database is qualified – we keep its Alexa ranking, the language it is written in, and give every site a “media type” to allow us to analyse further the results of the work that is done. We have removed two types of websites from the Media Monitor: content farms (websites that have zero original content and generally publish automated reposts of other websites’ content) and fansites (websites dedicated to one or a few very specific games that don’t cover any others). In the first few months of monitoring we found such sites really skewed the results we were getting, and so they had to go.

The trackers

We currently have more than 950 individual trackers (sometimes also referred to as alerts). Each of these trackers runs a full-text search for keywords. We have trackers for a lot of different things at the moment:

- Specific games

- Game franchises

- Hardware devices

- Video game companies

- Personalities

- Events

- Weird, ultra specific things that we want to track individually for whatever reason

90% of the trackers work very efficiently. They don’t return many false positives (i.e. articles that use the keywords but on an unrelated subject) and they don’t miss many of the relevant articles.

Some trackers require us to be a little clever, however. Let’s explain using the example of Thief.

Most problematic games have a title made of a single commonly used word. In this case, on top of looking for the word Thief, we added a number of other words, at least one of which needs to be present to create a positive hit. It looks a bit like this (slightly simplified):

You NEED the word “thief” and you NEED one of the following terms: xbox OR playstation OR steam

That tracker was performing very well until E3, when Uncharted 4: A Thief’s End was announced and threw up a problem.

We had to recreate the tracker for Thief as follows (this is a simplified version of it again):

You NEED the word “thief”, you NEED one of the following terms: xbox OR playstation OR steam, and you CANNOT have the word “uncharted”.
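
To make the logic of that rule concrete, here is a minimal sketch in Python. It is not the Media Monitor's actual code: the `Tracker` class, its field names and the sample headline are all made up for illustration, and it assumes a simple case-insensitive, whole-word match.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Tracker:
    """Hypothetical keyword rule; names are illustrative, not the real tool's."""
    required: frozenset                # every one of these words must appear
    any_of: frozenset = frozenset()    # at least one must appear (if non-empty)
    excluded: frozenset = frozenset()  # none of these may appear

    def matches(self, text: str) -> bool:
        # Lowercase the text and split it into alphanumeric words,
        # so "Thief's" yields the word "thief".
        words = set(re.findall(r"[a-z0-9]+", text.lower()))
        if not self.required <= words:
            return False
        if self.any_of and not (self.any_of & words):
            return False
        return not (self.excluded & words)


PLATFORMS = frozenset({"xbox", "playstation", "steam"})

# First version of the rule: NEED "thief" and NEED one platform term.
thief_v1 = Tracker(required=frozenset({"thief"}), any_of=PLATFORMS)

# Revised version: same rule, but CANNOT contain "uncharted".
thief_v2 = Tracker(
    required=frozenset({"thief"}),
    any_of=PLATFORMS,
    excluded=frozenset({"uncharted"}),
)

# Made-up headline for illustration only.
headline = "Uncharted 4: A Thief's End announced for PlayStation 4"
print(thief_v1.matches(headline))  # True: a false positive for Thief
print(thief_v2.matches(headline))  # False: the exclusion filters it out
```

The trade-off in a rule like this is that an article genuinely about Thief that also happens to mention Uncharted would be dropped too.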

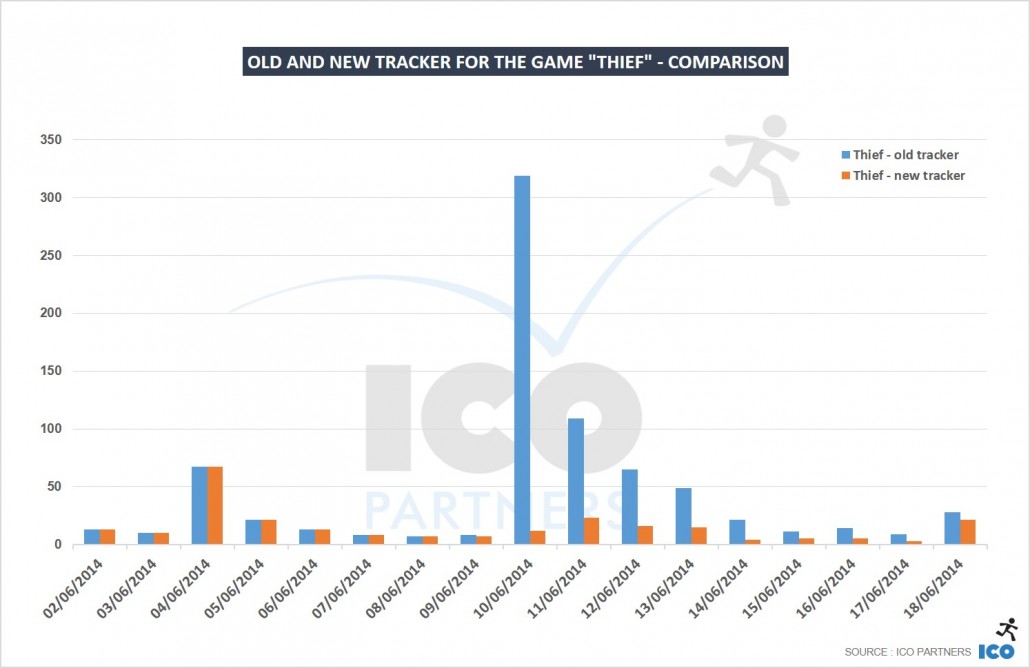

This is a chart showing the before and after of that tracker:

We keep a very close eye on the behaviour of the trackers, trying to keep them as relevant as possible while avoiding false positives.

Quality versus Quantity

Currently (and for the foreseeable future), the Media Monitor only provides quantitative data. That in itself has already been very helpful to us. We see trends in which news is most likely to be picked up, and it gives us benchmarks for the different countries we work in (we do PR across all of Europe, not an easy task).

We are also very aware of the questions we cannot answer with this data – but we would rather have half the answers than none at all.

Please let me know if you have questions. I will use them to update this post and clarify the notions presented here.
